Synthetic Humans are designed and built to work from any server. The JavaScript-driven avatar runs client side, with quality that is fully independent of the end user's hardware.

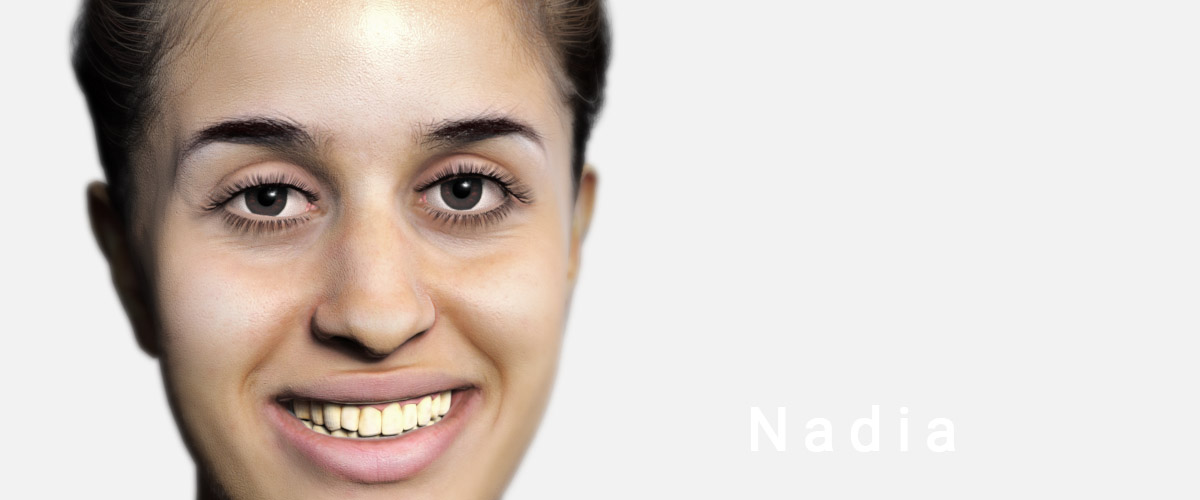

A selection of Synthetic Human avatars is available for lease as an off-the-shelf alternative to a fully bespoke build.

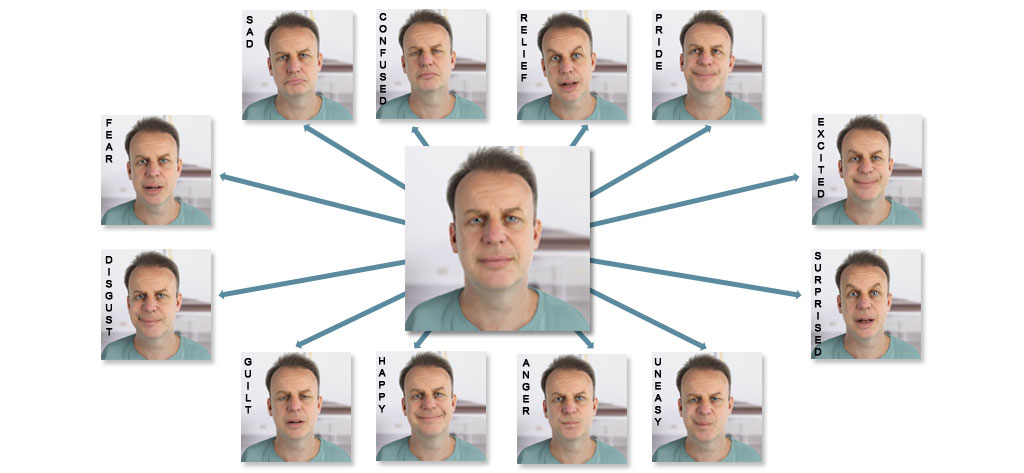

Synthetic Humans can display a variety of emotions drawn from real performances.

Synthetic Humans are built with lip-sync functionality driven by viseme metadata from real-time TTS (text-to-speech) services.

Synthetic Humans are built with over a dozen emotions (for example disgust, excited, angry, relief, happy and pride) that can be triggered by a simple JavaScript function call.
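The function call itself is not named here. As a minimal sketch, assuming a hypothetical player object that holds the emote set for one particular build, triggering an emotion and falling back to the idle cycle might look like this (`SyntheticHumanPlayer`, `triggerEmotion` and `onClipEnded` are illustrative names, not the product's actual API):

```javascript
// Hypothetical sketch: the class name, method names and emote list are
// illustrative assumptions, not the product's actual API.
class SyntheticHumanPlayer {
  constructor(emotes) {
    // The set of emotes available for this particular Synthetic Human build.
    this.emotes = new Set(emotes);
    this.current = "neutral";
  }

  // Switch to a named emotion; names not in this build's set are rejected.
  triggerEmotion(name) {
    if (!this.emotes.has(name)) {
      throw new Error(`Unknown emotion: ${name}`);
    }
    this.current = name;
    return this.current;
  }

  // Called when an emotion clip finishes: return to the idle 'neutral' cycle.
  onClipEnded() {
    this.current = "neutral";
  }
}

const avatar = new SyntheticHumanPlayer(["disgust", "excited", "angry", "relief", "happy", "pride"]);
avatar.triggerEmotion("happy");
console.log(avatar.current); // happy
avatar.onClipEnded();
console.log(avatar.current); // neutral
```

Rejecting unknown names, rather than silently ignoring them, makes it easier to catch a call for an emote that a given build does not include.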

Synthetic Humans are pre-rendered. This means that their quality and level of realism are consistent across all delivery platforms and are not affected by end-user hardware.
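One way a client-side player can keep pre-rendered quality independent of the device is to derive the displayed frame from elapsed time rather than from how many frames have been drawn. The sketch below assumes each clip is a pre-rendered frame sequence played at a fixed frame rate; `frameAt` and its parameters are hypothetical, since the player's internals are not described here.

```javascript
// Minimal sketch, assuming each clip is a pre-rendered sequence of frames
// played at a fixed frame rate. The function name and parameters are illustrative.
function frameAt(elapsedMs, frameCount, fps, loop = true) {
  // Convert wall-clock time to a frame index so that playback speed
  // does not depend on how fast the device can draw.
  const index = Math.floor((elapsedMs / 1000) * fps);
  if (loop) {
    return index % frameCount;
  }
  // Non-looping clips hold on their final frame.
  return Math.min(index, frameCount - 1);
}

console.log(frameAt(1000, 50, 25)); // 25
console.log(frameAt(2500, 50, 25)); // 12 (frame 62 wraps around a 50-frame loop)
console.log(frameAt(2500, 50, 25, false)); // 49
```

On a slower device the player may skip frames, but every frame it does show is the same pre-rendered image, so both timing and visual quality stay consistent.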

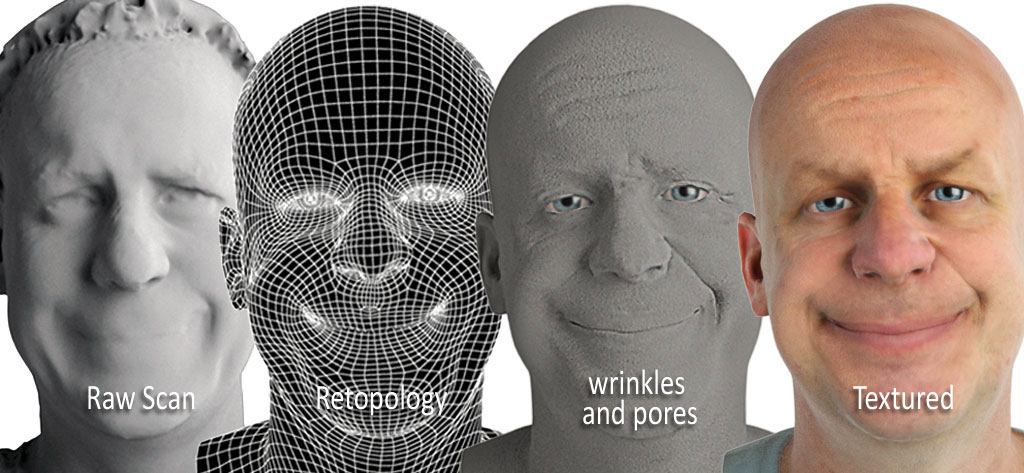

Synthetic Humans use the latest 3D modelling and rendering techniques to achieve the highest levels of realism. Each Synthetic Human build starts with the selection of a model to be scanned for the production process. The chosen talent is scanned using a custom-built multi-camera rig designed specifically for scanning human heads. Dubbed 'The Italian Speedboat' because of its laminated wood and aluminium construction, the rig is built to capture multiple portrait images simultaneously while remaining portable enough to keep the shoot process flexible. Using Canon DSLR cameras (18 megapixels for the main facial detail and 10 megapixels for more general detail), up to 72 images are captured for a neutral (whole-head) scan and 24 images for each supporting FACS-based scan.

The images captured with the 'Italian Speedboat' rig are then used to create both the meshes and the textures for the final Synthetic Human: accurate, lifelike 3D models covering everything from skin pores, wrinkles and skin tone through to individual FACS-based blend targets for animation.

The Synthetic Human is then rigged for animation using FACS-derived blend shapes and supporting textures. Over 60 blend shapes are taken from the scanned subject and wired into the neutral scan to enable animation of the face. Alongside the physical changes to the face, changes in skin tone, fine wrinkles and deformations are also captured and used to enhance the realism of the animation.
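The rig is not described in further detail here. For illustration, the common linear ("delta") blend-shape formulation computes each vertex as the neutral position plus a weighted sum of each target's offset from neutral. A minimal sketch, assuming flat vertex arrays and hypothetical shape names such as `jawOpen` and `smile`:

```javascript
// Standard delta blend-shape mixing:
//   result = neutral + sum_i( weight_i * (target_i - neutral) )
// Vertex data is a flat array [x0, y0, z0, x1, ...]; shape names are hypothetical.
function blendShapes(neutral, targets, weights) {
  const out = Float32Array.from(neutral);
  for (const [name, weight] of Object.entries(weights)) {
    const target = targets[name];
    // Skip shapes that are missing from this rig or switched off.
    if (!target || weight === 0) continue;
    for (let i = 0; i < out.length; i++) {
      out[i] += weight * (target[i] - neutral[i]);
    }
  }
  return out;
}

const neutral = [0, 0, 0];
const targets = { jawOpen: [0, -1, 0], smile: [1, 0, 0] };
const mixed = blendShapes(neutral, targets, { jawOpen: 0.5, smile: 0.25 });
console.log(Array.from(mixed)); // [ 0.25, -0.5, 0 ]
```

Because each target contributes only its offset from the neutral scan, several FACS shapes can be active at once and combine additively.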

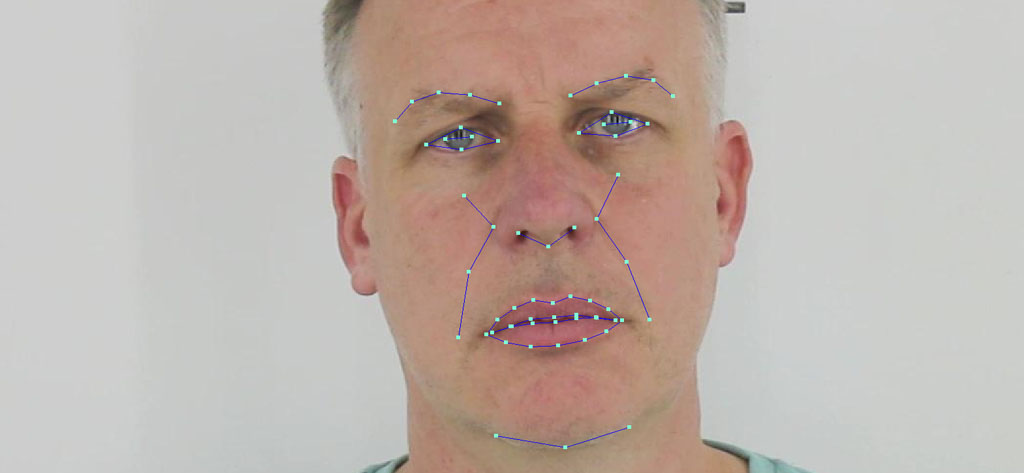

Animation is drawn from performance capture recorded at the same time as the scan. Using high-end video performance analysis software (of the kind used in AAA game and blockbuster film production), realistic, lifelike emotion and movement are captured from actual performances and applied to the Synthetic Humans to bring them to life. Once the animation is applied, the Synthetic Human is rendered for integration into the cross-platform player.

To breathe life into the Synthetic Humans, each has an idle animation called 'neutral'. The neutral is drawn at random from a number of discrete 'idle' animations covering actions such as blinks, subtle head movements and subtle facial movements. Through over a decade of extensive research, practice and experience, the Synthetic Humans' 'neutral' cycles have been polished to look natural, without the awkward movements or repetitiveness that would break audience empathy.
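A minimal sketch of the randomised idle selection, assuming a hypothetical list of idle clip names and a simple rule that the same clip never plays twice in a row (the actual rules used to avoid repetitiveness are not stated here):

```javascript
// Hypothetical sketch: clip names and the no-immediate-repeat rule are
// assumptions for illustration. `random` must return a number in [0, 1).
function createIdlePicker(clips, random = Math.random) {
  let last = null;
  return function nextIdle() {
    // Exclude the clip that just played so no idle repeats back to back
    // (unless there is only one clip to choose from).
    const candidates = clips.length > 1 ? clips.filter((c) => c !== last) : clips;
    const pick = candidates[Math.floor(random() * candidates.length)];
    last = pick;
    return pick;
  };
}

const nextIdle = createIdlePicker(["blink", "headTilt", "browRaise", "glance"]);
const sequence = Array.from({ length: 8 }, () => nextIdle());
console.log(sequence.join(" -> "));
```

The random source is injectable so that the selection can be made deterministic for testing.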

Synthetic Humans are built with a selection of triggerable emotive responses such as happy, sad, confused, excited and guilt. Each emotion is taken from performance capture, enabling believable, true-to-character emotes. As each Synthetic Human is unique, the available emotes can be tailored and expanded to suit each client's needs.

Utilising 15 viseme blend shapes scanned from the talent, real-time lip-sync can be delivered to complement third-party TTS services. The viseme shapes are triggered from the phonetic-breakdown metadata supplied by the third-party service provider.
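The metadata format varies by TTS provider; Amazon Polly's viseme speech marks, for example, pair a time offset in milliseconds with a viseme code. The sketch below assumes events of the form `{ time, value }` sorted by time, and uses hypothetical viseme codes and blend-shape names, so the mapping table would need to be replaced with the real 15-shape set and the chosen provider's codes.

```javascript
// Minimal sketch, assuming the TTS service returns viseme events as
// { time: <ms from start of audio>, value: <viseme code> }, sorted by time.
// The codes and blend-shape names below are hypothetical placeholders.
const VISEME_TO_SHAPE = {
  sil: "viseme_rest",
  p: "viseme_PBM",
  f: "viseme_FV",
  a: "viseme_AA",
  o: "viseme_OH",
};

// Return the blend shape that should be showing at audio time tMs.
function activeShape(events, tMs) {
  let current = "sil";
  for (const e of events) {
    if (e.time > tMs) break; // events are sorted, so later ones have not started yet
    current = e.value;
  }
  // Unknown codes fall back to the rest (silence) shape.
  return VISEME_TO_SHAPE[current] ?? VISEME_TO_SHAPE.sil;
}

const events = [
  { time: 0, value: "sil" },
  { time: 120, value: "p" },
  { time: 200, value: "a" },
  { time: 380, value: "sil" },
];
console.log(activeShape(events, 150)); // viseme_PBM
console.log(activeShape(events, 250)); // viseme_AA
```

In a player, `activeShape` would be called each frame with the current audio playback position, so the mouth follows the audio even if drawing stutters.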

1. Sequence-based randomised neutral animation.

2. Triggerable emotion sequences drawn from performance capture.

3. Triggerable visemes for lip-sync functionality.

Jon is one of the faces for artelli 42's 'Human Behavioural Emulation System'.

Rachel was built to run in a WebGL-based player, serving as the face of a training system in the field of medicine.

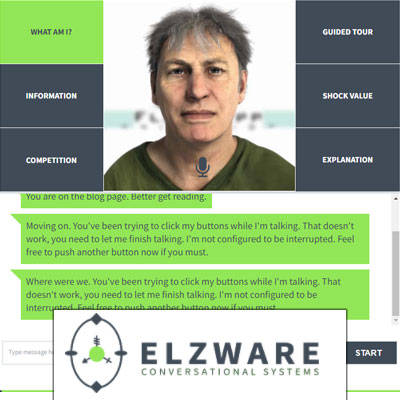

Rich was built from a bespoke scan and serves as the avatar for Elzware's conversational A.I.

A bespoke 3D avatar acting as their online virtual assistant for the launch of their rebrand.

A bespoke 3D avatar for Tesco Mobile's online virtual assistant to complement a conversational A.I. system provided by Creative Virtual.

A bespoke 3D avatar for M&S Money's online virtual assistant to complement a conversational A.I. system provided by Creative Virtual.

A bespoke 3D avatar for Atlas Consortium's internal virtual assistant to complement a conversational A.I. system provided by Creative Virtual.

Flagshipp delivered fully animated characters for Altadyn's real-time '3Dmeet' software.

A selection of bespoke 3D avatars for O2's online 'Gurus', built to complement a conversational A.I. system provided by Creative Virtual.

A selection of bespoke 3D avatars built for HSBC Hong Kong to act as their online virtual assistants.

A bespoke 3D avatar built to act as their online and offline virtual customer service.

A bespoke 3D avatar for Allianz to use as their online virtual assistant.

A bespoke 3D avatar for TalkTalk Mobile to use as their online virtual assistant.

A bespoke 3D avatar for Virgin Media, based on an 'employee of the month', to use as their online virtual assistant.

A bespoke 3D avatar for Renault to use as their online virtual assistant for the launch of the 'Twingo' range of cars.

A bespoke 3D avatar for On the Beach to use as their online virtual assistant.

A bespoke 3D avatar for Photobox to use as part of their online marketing.